Threads Instagram App

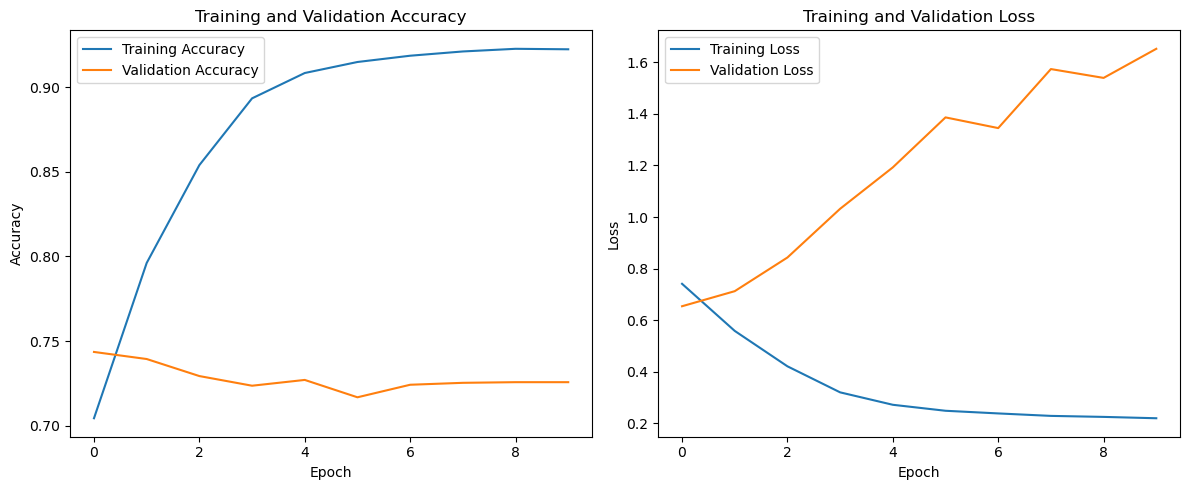

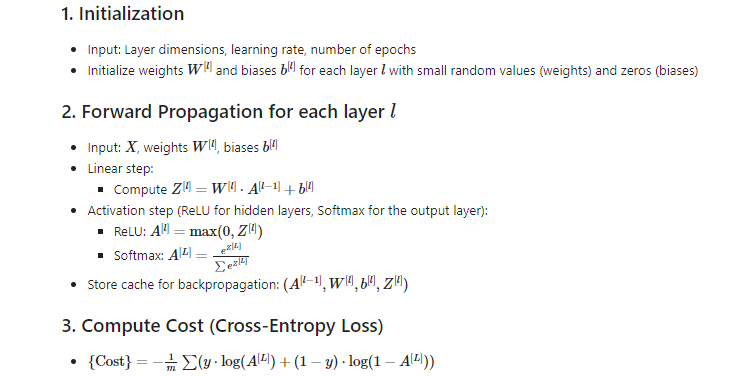

The project analyzes user reviews of the Threads Instagram app and develops initial predictive models, exploring different text processing techniques and machine learning models while identifying and addressing data imbalance and overfitting issues.

May 2023

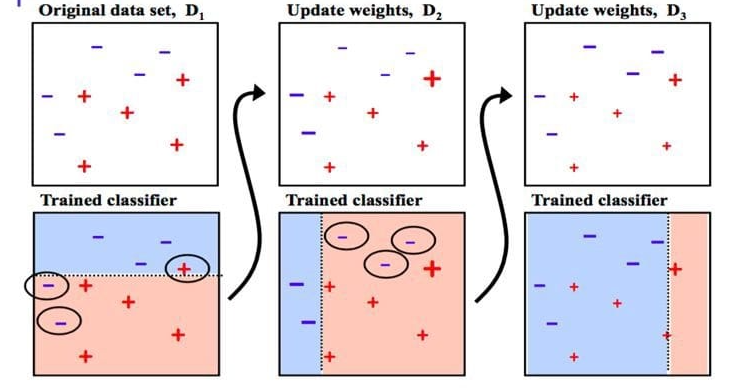

Jigsaw Multilingual Toxic Comment Classification

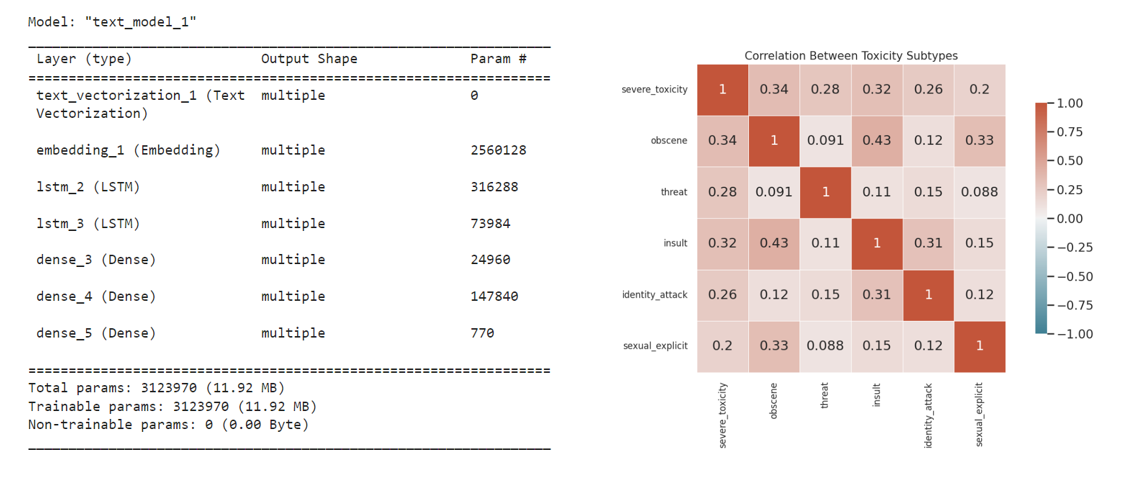

In this project, we developed multilingual machine learning models to classify toxicity in online conversations using English-only training data, with the goal of creating fair and effective tools to support healthier discussions across different languages.

November 2023

Walmart Data Science Project

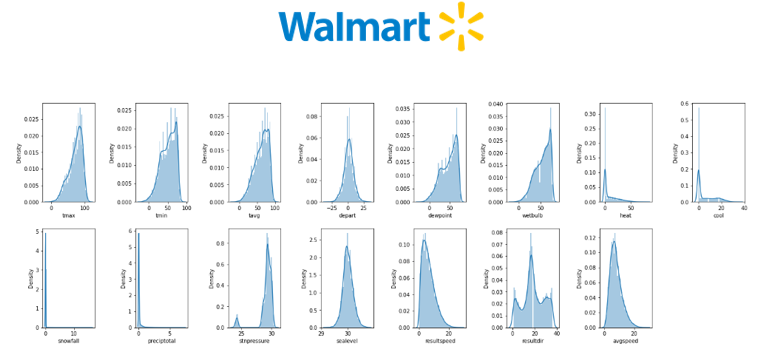

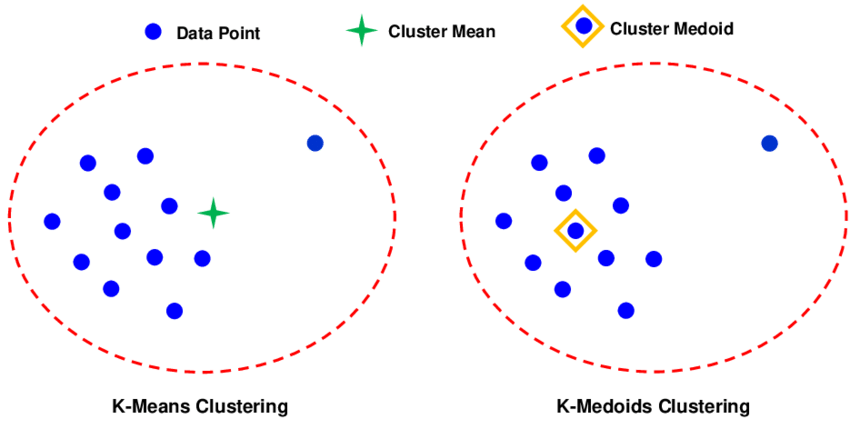

This project examines the effect of weather on Walmart sales by combining and analyzing sales and weather data, using clustering algorithms to group weather stations, and building a machine learning model to predict sales, ultimately aiding in optimizing inventory management and business strategies.

April 2022

Game Solving Using AI Algorithms

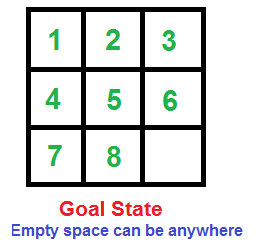

This project focuses on solving the sliding puzzle game using three search algorithms: Iterative Deepening, Breadth-First Search (BFS), and A* (A-Star). The goal is to rearrange a square grid of tiles (3x3 or 4x4) into ascending order by swapping vertically or horizontally adjacent tiles.
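As an illustration of the A* approach, here is a minimal Python sketch, not the project's actual code. It assumes the classic variant of the puzzle, where one cell is blank (written as `0`), a move slides an adjacent tile into the blank, and the goal places the tiles in ascending order with the blank last. The function names (`manhattan`, `neighbors`, `solve_astar`) and the choice of the Manhattan-distance heuristic are assumptions made for this example.

```python
import heapq


def manhattan(state, n):
    """Sum of each tile's distance from its goal cell (the blank, 0, is ignored)."""
    total = 0
    for idx, tile in enumerate(state):
        if tile == 0:
            continue
        goal = tile - 1  # tile k belongs at index k - 1
        total += abs(idx // n - goal // n) + abs(idx % n - goal % n)
    return total


def neighbors(state, n):
    """Yield every state reachable by sliding one adjacent tile into the blank."""
    blank = state.index(0)
    row, col = divmod(blank, n)
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        r, c = row + dr, col + dc
        if 0 <= r < n and 0 <= c < n:
            swap = r * n + c
            nxt = list(state)
            nxt[blank], nxt[swap] = nxt[swap], nxt[blank]
            yield tuple(nxt)


def solve_astar(start, n=3):
    """Return the list of states from start to goal, or None if unsolvable."""
    goal = tuple(range(1, n * n)) + (0,)  # assumed goal: ascending, blank last
    frontier = [(manhattan(start, n), 0, start)]  # entries are (f = g + h, g, state)
    came_from = {start: None}
    cost = {start: 0}
    while frontier:
        _, g, state = heapq.heappop(frontier)
        if state == goal:
            path = []
            while state is not None:
                path.append(state)
                state = came_from[state]
            return path[::-1]
        if g > cost[state]:
            continue  # stale queue entry; a cheaper route was already found
        for nxt in neighbors(state, n):
            new_g = g + 1
            if new_g < cost.get(nxt, float("inf")):
                cost[nxt] = new_g
                came_from[nxt] = state
                heapq.heappush(frontier, (new_g + manhattan(nxt, n), new_g, nxt))
    return None
```

For example, `solve_astar((1, 2, 3, 4, 5, 6, 0, 7, 8))` returns a path of three states, that is, two moves. Replacing the priority queue with a plain FIFO queue and dropping the heuristic would turn the same loop into BFS. This plain version is practical for 3x3 boards; hard 4x4 instances would likely need a more memory-efficient search.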

May 2022

Knight's Move: A Chess-Inspired Game

This project is an implementation of a chess-inspired game called Knight's Move. Its development applies agile practices and software quality assurance principles to create a fun and engaging experience. The project is written in Java and follows the Model-View-Controller (MVC) architectural pattern.

October 2022